The Deceptive Difficulty of Splatoon 3 Gear Optimization

Splatoon 3 gear optimization hides surprising complexity: 160B possible builds, non-linear stacking, and strategic noise challenging ML models.

Hidden Complexity in Splatoon’s Gear System

Nintendo's Splatoon series is full of contradictions. At first glance, its vibrant, cartoony aesthetic suggests simple, kid-friendly fun. Yet beneath that cheerful exterior lies remarkable depth across its interconnected gameplay systems, and nothing illustrates this better than the gear system. On the surface, gear is a form of self-expression: players assemble fun outfits for their characters to wear into battle, and many become quite attached to their customized avatars. Beneath this playful facade, however, is a system of surprising strategic depth, where gear choices can decisively shape the outcome of a match. This hidden complexity makes gear building a fascinating and formidable challenge, especially for Machine Learning approaches that try to discover the "perfect" build automatically.

Splatoon’s Gear System Explained

Released in 2022, Splatoon 3 is Nintendo's wildly successful competitive team-based shooter, where players compete in fast-paced battles over territory and objectives. Before entering a match, players face a critical strategic choice that shapes their character's effectiveness in battle: their gear.

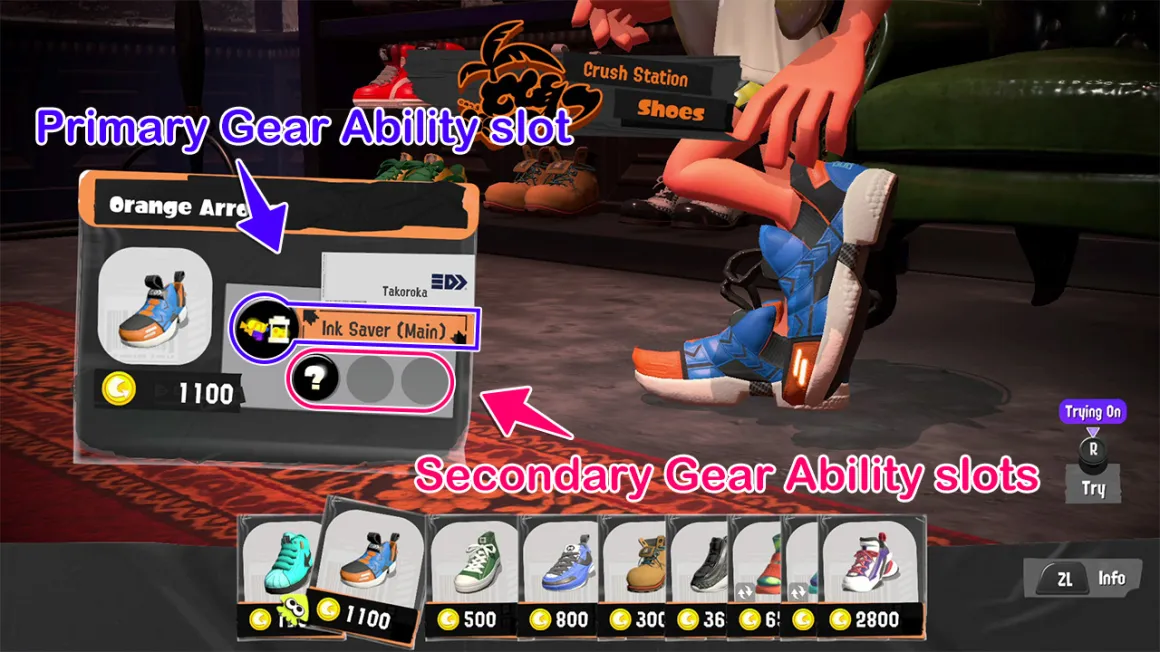

You can think of Splatoon's gear system as choosing your character's battle outfit, which consists of three pieces: headgear, clothing, and shoes. Each piece has one high-impact primary (main) ability slot and three smaller secondary (sub) ability slots. Strategic depth comes from powerful, build-defining abilities that are restricted to the main slot of specific gear types. For example, the versatile Comeback ability is available only as a main ability on headgear. It is strong enough to serve as the centerpiece around which a player's entire strategy revolves.
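To make the slot structure concrete, here is a minimal Python sketch of a single gear piece that enforces the main-only rule. The class and field names are hypothetical. The exclusive-ability lists reflect Splatoon 3 as I understand it and should be checked against the current patch.

```python
from dataclasses import dataclass

# Main-slot-only abilities per gear type (Splatoon 3, as understood here; verify in-game).
EXCLUSIVE_MAINS = {
    "headgear": {"Opening Gambit", "Last-Ditch Effort", "Tenacity", "Comeback"},
    "clothing": {"Ninja Squid", "Haunt", "Thermal Ink", "Respawn Punisher", "Ability Doubler"},
    "shoes": {"Stealth Jump", "Object Shredder", "Drop Roller"},
}
ALL_EXCLUSIVES = set().union(*EXCLUSIVE_MAINS.values())


@dataclass(frozen=True)
class GearPiece:
    gear_type: str              # "headgear", "clothing" or "shoes"
    main: str                   # the single main ability
    subs: tuple[str, str, str]  # exactly three sub abilities

    def __post_init__(self):
        if self.gear_type not in EXCLUSIVE_MAINS:
            raise ValueError(f"unknown gear type: {self.gear_type}")
        if len(self.subs) != 3:
            raise ValueError("each gear piece has exactly three sub slots")
        # An exclusive ability may only be the main of its own gear type...
        if self.main in ALL_EXCLUSIVES and self.main not in EXCLUSIVE_MAINS[self.gear_type]:
            raise ValueError(f"{self.main} is not a {self.gear_type} main ability")
        # ...and can never occupy a sub slot.
        if any(sub in ALL_EXCLUSIVES for sub in self.subs):
            raise ValueError("main-only abilities cannot occupy sub slots")


hat = GearPiece("headgear", "Comeback", ("Swim Speed Up", "Swim Speed Up", "Quick Super Jump"))
try:
    GearPiece("shoes", "Comeback", ("Swim Speed Up",) * 3)
except ValueError as err:
    print("rejected:", err)
```

Validating in `__post_init__` means an illegal piece can never be constructed, so any downstream search only ever sees legal gear.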

This elegant setup is intuitive enough for younger players to grasp. Yet it also creates a deceptively intricate combinatorial puzzle that challenges even seasoned veterans. For man and machine alike, gear optimization is a fascinating but formidable problem, so formidable that entire websites have been built just to help players advise each other on the most effective builds.

The Weapon-Gear Relationship

While gear provides abilities that adjust your character's performance, the foundation of every loadout is your weapon choice. This is typically the first, and arguably the most impactful, decision a player makes. The weapon defines fundamental aspects of gameplay: it brings strengths that gear can enhance and weaknesses that gear can compensate for. Splatoon organizes its weapons within a clear hierarchy:

- Weapon Class (e.g., Shooter, Roller, Charger)
  - Defines the core movement mechanics, attack patterns, and overall gameplay archetype.
- Specific Weapon (e.g., Splattershot, Carbon Roller, E-liter 4K)
  - Dictates precise weapon statistics and handling characteristics, shaping the nuances of play.
- Weapon Kit (e.g., Splattershot vs. Tentatek Splattershot)
  - Includes a specific pairing of a secondary (sub) weapon, such as a bomb or utility, and a powerful special weapon, comparable to ultimates in other competitive games.
  - Shapes gear optimization even between variants of the same specific weapon.

This structured design both simplifies and complicates gear selection. On one hand, similar weapon kits often allow interchangeable gear builds, which lets players experiment without much investment. On the other hand, subtle differences between even closely related kits might require entirely different gear setups.
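The class → weapon → kit hierarchy can be sketched as a small data model. `WeaponKit`, `shared_levels`, and `kit_differences` are hypothetical names. The sub/special pairings below are as I recall them for Splatoon 3, so verify them in-game before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeaponKit:
    weapon_class: str  # core movement/attack archetype, e.g. "Shooter"
    weapon: str        # base stats and handling, e.g. "Splattershot"
    kit: str           # the exact variant a player equips
    sub_weapon: str    # bundled secondary weapon
    special: str       # bundled special weapon


def shared_levels(a: WeaponKit, b: WeaponKit) -> list:
    """Hierarchy levels two kits have in common (class, then specific weapon)."""
    shared = []
    if a.weapon_class == b.weapon_class:
        shared.append("class")
        if a.weapon == b.weapon:
            shared.append("weapon")
    return shared


def kit_differences(a: WeaponKit, b: WeaponKit) -> dict:
    """Bundled sub/special weapons that differ between two kits."""
    return {
        field: (getattr(a, field), getattr(b, field))
        for field in ("sub_weapon", "special")
        if getattr(a, field) != getattr(b, field)
    }


splattershot = WeaponKit("Shooter", "Splattershot", "Splattershot", "Suction Bomb", "Trizooka")
tentatek = WeaponKit("Shooter", "Splattershot", "Tentatek Splattershot", "Splat Bomb", "Triple Inkstrike")
carbon = WeaponKit("Roller", "Carbon Roller", "Carbon Roller", "Autobomb", "Zipcaster")

print(shared_levels(splattershot, tentatek))    # ['class', 'weapon']
print(kit_differences(splattershot, tentatek))  # both sub and special differ
print(shared_levels(splattershot, carbon))      # []
```

Two kits can share every level above the kit and still differ in both bundled weapons. This is why gear may transfer between them only partially.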

The Sheer Size of the Search Space

Each piece of gear has one main slot and three sub slots. Headgear has four main-slot-only abilities tied to it, clothing has five, and shoes have three. This leads to a massive search space of roughly 1.3 billion distinct gear configurations (1,262,451,960 exactly). There are also 130 unique weapon kits, which balloons the figure to over 160 billion weapon-gear combinations (164,118,754,800 exactly).

As of May 2025, the largest public repository of battle data (stat.ink) holds about 15 million recorded battles. With 8 players per battle, that is at most roughly 120 million gear instances. Assume, very optimistically, that every instance is a unique combination. Even then, coverage is more than 1,300 times (three orders of magnitude) smaller than the total search space. This is what makes the problem especially difficult for players and algorithms alike.
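One counting method reproduces both figures exactly. The sketch below rests on two assumptions:

- There are 14 standard abilities that can go in any slot.
- Builds that yield the same abilities and AP totals are counted once, so sub-slot order, and which piece carries a given standard ability, do not matter.

Under those assumptions, the main slots contribute 2,538 configurations and the nine sub slots contribute C(22, 9) = 497,420. Their product is 1,262,451,960.

```python
from itertools import combinations
from math import comb, prod

N_STANDARD = 14  # assumption: standard abilities usable in any slot
EXCLUSIVE_MAINS = {"headgear": 4, "clothing": 5, "shoes": 3}  # main-slot-only abilities
N_SUB_SLOTS = 9  # 3 gear pieces x 3 sub slots
N_WEAPON_KITS = 130


def multisets(n_types: int, k: int) -> int:
    """Ways to pick k items from n_types with repetition, ignoring order."""
    return comb(n_types + k - 1, k)


def main_slot_configurations() -> int:
    """Pieces not carrying a standard main carry one of their exclusive mains.

    Standard mains only count as a multiset, since 10 AP of an ability is the
    same no matter which piece provides it.
    """
    pieces = list(EXCLUSIVE_MAINS)
    total = 0
    for r in range(len(pieces) + 1):
        for standard_pieces in combinations(pieces, r):
            exclusive_choices = prod(
                EXCLUSIVE_MAINS[p] for p in pieces if p not in standard_pieces
            )
            total += exclusive_choices * multisets(N_STANDARD, r)
    return total


mains = main_slot_configurations()
subs = multisets(N_STANDARD, N_SUB_SLOTS)
gear_configs = mains * subs
all_builds = gear_configs * N_WEAPON_KITS
observed = 15_000_000 * 8  # recorded battles x players per battle

print(f"{gear_configs:,} gear configurations")
print(f"{all_builds:,} weapon-gear combinations")
print(f"search space / observed instances = {all_builds / observed:,.0f}")
```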

The Intricacies of Ability Stacking

Much of the strategic depth comes directly from the ability stacking mechanics. Splatoon quantifies the investment in each ability with an internal point system, colloquially called Ability Points (AP), in which the two slot types carry different weights:

- A main ability slot grants a hefty 10 AP towards that ability, offering a substantial boost.

- A sub ability slot grants a comparatively modest 3 AP, offering smaller, but cumulatively significant, gameplay effects.
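Totaling AP for a loadout is simple bookkeeping. The minimal sketch below assumes a loadout is given as hypothetical `(main, subs)` pairs, one per gear piece.

```python
from collections import Counter

MAIN_AP = 10  # a main slot grants 10 AP
SUB_AP = 3    # each sub slot grants 3 AP


def ability_points(loadout):
    """Sum AP per ability; loadout is a list of (main, [sub, sub, sub]) per gear piece."""
    totals = Counter()
    for main, subs in loadout:
        totals[main] += MAIN_AP
        for sub in subs:
            totals[sub] += SUB_AP
    return totals


# Hypothetical loadout: headgear, clothing, shoes.
loadout = [
    ("Swim Speed Up", ["Swim Speed Up", "Quick Super Jump", "Ink Resistance Up"]),
    ("Ink Saver (Main)", ["Swim Speed Up", "Swim Speed Up", "Special Charge Up"]),
    ("Run Speed Up", ["Swim Speed Up", "Sub Resistance Up", "Special Charge Up"]),
]
ap = ability_points(loadout)
print(dict(ap))
```

Three mains and nine subs always sum to 3 × 10 + 9 × 3 = 57 AP. The strategy lies entirely in how that fixed budget is split.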

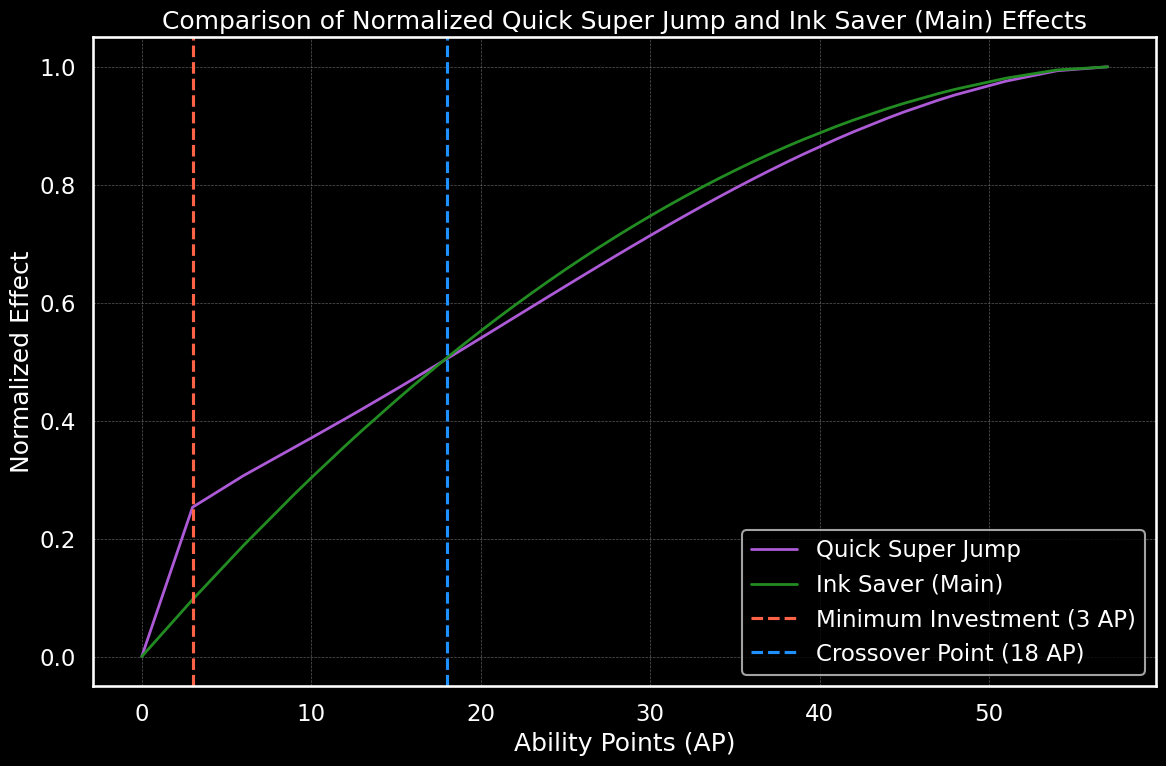

Critically, each ability also follows its own non-linear effectiveness curve, typically characterized by diminishing returns as the total AP invested increases.

This non-linear scaling introduces key strategic consequences:

- Utility Subs: Some abilities provide a significant benefit with minimal investment (often just 3 AP), making them efficient and valuable additions to many builds. This phenomenon presents the biggest hurdle for traditional ML approaches: is a single sub-slot inclusion strategically optimal, or simply random noise? More on this later.

- Stacking-Dependent Abilities: Other abilities offer negligible benefit at low AP investments, becoming effective only when heavily stacked, making them powerful but niche options.
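Toy curves can illustrate the two shapes. Both functions below are hypothetical stand-ins chosen only to show the qualitative behavior: a saturating curve for a utility sub and a logistic curve for a stacking-dependent ability. They are not the game's actual ability formulas, and the parameter values are arbitrary.

```python
import math

MAX_AP = 57  # 3 mains x 10 AP + 9 subs x 3 AP


def utility_curve(ap: float, scale: float = 6.0) -> float:
    """Hypothetical utility-sub shape: steep early gains that quickly saturate (0..1)."""
    return (1 - math.exp(-ap / scale)) / (1 - math.exp(-MAX_AP / scale))


def stacking_curve(ap: float, midpoint: float = 30.0, steepness: float = 0.2) -> float:
    """Hypothetical stacking shape: logistic curve rescaled to 0 at 0 AP and 1 at MAX_AP."""
    def logistic(x: float) -> float:
        return 1 / (1 + math.exp(-steepness * (x - midpoint)))
    return (logistic(ap) - logistic(0)) / (logistic(MAX_AP) - logistic(0))


def marginal_gain(curve, current_ap: float, added_ap: float = 3) -> float:
    """Extra effect from one more sub slot (3 AP) on top of current_ap."""
    return curve(current_ap + added_ap) - curve(current_ap)


for name, curve in [("utility", utility_curve), ("stacking", stacking_curve)]:
    gains = [marginal_gain(curve, ap) for ap in (0, 12, 27)]
    print(name, [f"{g:.3f}" for g in gains])
```

With these toy parameters, the first 3 AP buys roughly 39% of the utility curve but almost nothing on the stacking curve. The value of each extra sub shrinks for the former and grows for the latter.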

This interplay transforms gear building into a complex resource allocation puzzle, as the optimal choice for each ability depends on:

- The effectiveness of the ability at the current level of investment.

- The opportunity cost of investing AP elsewhere.

- Synergies with other abilities in the build.

- Compatibility with the equipped weapon.

- Individual playstyle considerations.
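Opportunity cost and non-linearity together mean that locally sensible choices can add up to a poor build. The toy sketch below uses two hypothetical value curves, not real game data. It compares a greedy allocator, which gives each sub slot to whichever ability gains the most right now, with exhaustive search over every way to split nine sub slots.

```python
import math
from collections import Counter
from itertools import combinations_with_replacement

SUB_AP = 3
N_SUBS = 9

# Hypothetical value curves for two abilities (not the game's real formulas).
CURVES = {
    "utility": lambda ap: 1 - math.exp(-ap / 4),  # big first sub, saturates quickly
    "stacking": lambda ap: 2 * (ap / 27) ** 3,    # weak until heavily stacked
}


def build_value(alloc):
    """Total value of an allocation {ability: number of sub slots}."""
    return sum(curve(SUB_AP * alloc.get(name, 0)) for name, curve in CURVES.items())


def exhaustive_best():
    """Try every multiset of N_SUBS sub slots across the abilities."""
    best_alloc, best_val = None, -math.inf
    for combo in combinations_with_replacement(CURVES, N_SUBS):
        alloc = Counter(combo)
        val = build_value(alloc)
        if val > best_val:
            best_alloc, best_val = dict(alloc), val
    return best_alloc, best_val


def greedy():
    """Give each next sub slot to whichever ability gains the most right now."""
    alloc = Counter()

    def gain(name):
        trial = alloc.copy()
        trial[name] += 1
        return build_value(trial) - build_value(alloc)

    for _ in range(N_SUBS):
        alloc[max(CURVES, key=gain)] += 1
    return dict(alloc), build_value(alloc)


print("greedy:    ", greedy())
print("exhaustive:", exhaustive_best())
```

In this toy setup, greedy spends eight of the nine sub slots on the utility ability, for a total value of about 1.0. Exhaustive search puts all nine on the stacking-dependent ability, for a value of 2.0. Each utility sub looked like the better choice at the moment it was made, yet the combination is worse.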

Like a snowball rolling downhill, these small but important pieces of the system compound, creating significant difficulty for both players and algorithms to properly optimize gear while taking everything into consideration.

Data Complexity and Noise

As if the system weren't complex enough already, one final wrinkle makes the optimization problem particularly difficult to solve: noise. Splatoon 3 significantly reduces randomness compared to its predecessors. Even so, four distinct layers of noise still complicate the interpretation of gathered data:

- Random Ability Assignment: Gear sub-slots are initially filled at random, with probabilities weighted by each gear piece's "brand". Since brand information is typically absent from real-world datasets, baseline observations contain unpredictable elements that are hard, if not impossible, to compensate for.

- Optimization Costs: Crafting a min-maxed gear set demands substantial time and in-game resources. Consequently, many observed loadouts are incomplete optimizations or practical compromises ("good enough" builds). Others are gear setups recycled across multiple weapons and across shifts in the metagame (the broader competitive landscape), despite being suboptimal.

- Player Expertise Distribution: Gear data aggregates choices from players with widely varying game knowledge and priorities. Some choices represent deep, strategic understanding by experienced players; others reflect aesthetic preferences, outdated practices, limited knowledge of current community best practices, or experimental off-meta strategies by skilled players that may not prove viable.

- Multimodality and Meta Evolution: Many weapons support multiple viable builds, making it challenging to identify a singular "optimal" choice. Conversely, certain weapons have clearly defined niches with straightforward optimal builds and broad consensus. Additionally, continuous meta shifts mean previously optimal gear configurations can become outdated or suboptimal over time.

These factors combine to create substantial ambiguity in the data. Determining whether a single sub-slot ability (such as Quick Super Jump) is strategically optimal, randomly assigned, part of an incomplete setup, or intentionally suboptimal is a non-trivial challenge. Without careful consideration of context, what appears to be irrelevant "noise" could in fact be essential strategic signal, posing a formidable challenge for conventional machine learning approaches.
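Random assignment alone can make a lone Quick Super Jump sub look deliberate. The sketch below simulates brand-biased sub rolls. The brand, its favored/unfavored weights, and the assumption that the three rolls are independent are all hypothetical. The numbers illustrate the mechanism, not the game's actual odds.

```python
import random
from collections import Counter

# The 14 standard abilities that can appear in sub slots (Splatoon 3).
STANDARD_ABILITIES = [
    "Ink Saver (Main)", "Ink Saver (Sub)", "Ink Recovery Up", "Run Speed Up",
    "Swim Speed Up", "Special Charge Up", "Special Saver", "Special Power Up",
    "Quick Respawn", "Quick Super Jump", "Sub Power Up", "Ink Resistance Up",
    "Sub Resistance Up", "Intensify Action",
]


def brand_weights(favored, unfavored, favored_weight=10.0, unfavored_weight=0.5):
    """Hypothetical brand bias; the weights are illustrative, not the game's values."""
    return [
        favored_weight if a == favored else unfavored_weight if a == unfavored else 1.0
        for a in STANDARD_ABILITIES
    ]


def roll_subs(weights, rng):
    """Fill three sub slots independently (an assumption), weighted by brand."""
    return rng.choices(STANDARD_ABILITIES, weights=weights, k=3)


rng = random.Random(0)
weights = brand_weights(favored="Quick Super Jump", unfavored="Ink Saver (Sub)")
rolls = Counter(sub for _ in range(3000) for sub in roll_subs(weights, rng))
share = rolls["Quick Super Jump"] / sum(rolls.values())
print(f"Quick Super Jump share of random subs: {share:.0%}")
```

Under these made-up weights, Quick Super Jump is expected to fill about 44% of randomly rolled sub slots. Its frequency in a dataset therefore says little about player intent unless brand and context are known.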

Why Conventional ML Models Struggle

These layers of noise and system complexity underscore a fundamental requirement for gear optimization: context. Interpreting any individual gear choice, like that lone Quick Super Jump sub-slot mentioned earlier, is impossible in isolation. Is it:

- An optimal "utility sub," carefully chosen to complement the specific weapon kit?

- A randomly assigned ability yet to be scrubbed or overwritten?

- Part of a gear piece primarily optimized for a different weapon or strategy?

- An intentional, albeit suboptimal, choice reflecting a niche strategy or personal preference?

The correct interpretation hinges entirely on interacting factors: the chosen weapon kit, the complete set of equipped abilities across the loadout, the prevailing competitive meta, and potentially unobservable factors like player intent or resource constraints.

This deep contextual dependency is exactly why conventional machine learning approaches struggle here. Standard techniques often identify noise through statistical deviations or treat features as largely independent variables. They might assume that outliers are errors to be filtered out, or that frequently occurring patterns are the primary signal. In the Splatoon gear system, however, these assumptions quickly break down. An apparent outlier (an uncommon ability choice) might be a highly effective niche strategy in the right context: crucial signal disguised as noise. Conversely, blindly copying the most common builds can lead to suboptimal setups when key contextual nuances are overlooked, especially for weapons that support multiple viable builds (multimodality).
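A toy example shows how context can flip the verdict. The observations below are hypothetical. Quick Super Jump is rare overall, so a context-free frequency filter would discard it, yet it appears in 90% of builds for one kit. The kit names and the 25% threshold are made up for illustration.

```python
from collections import Counter

# Hypothetical observations: (weapon kit, set of abilities present in the build).
OBSERVATIONS = (
    [("Kit A", {"Swim Speed Up", "Quick Super Jump"})] * 18
    + [("Kit A", {"Swim Speed Up"})] * 2
    + [("Kit B", {"Swim Speed Up", "Ink Resistance Up"})] * 80
    + [("Kit B", {"Swim Speed Up", "Quick Super Jump"})] * 2
)
THRESHOLD = 0.25  # arbitrary "keep if at least this common" cutoff


def global_frequency(obs, ability):
    """Share of all builds containing the ability, ignoring context."""
    return sum(ability in abilities for _, abilities in obs) / len(obs)


def per_kit_frequency(obs, ability):
    """Share of builds containing the ability, conditioned on the weapon kit."""
    hits, totals = Counter(), Counter()
    for kit, abilities in obs:
        totals[kit] += 1
        hits[kit] += ability in abilities
    return {kit: hits[kit] / totals[kit] for kit in totals}


g = global_frequency(OBSERVATIONS, "Quick Super Jump")
per_kit = per_kit_frequency(OBSERVATIONS, "Quick Super Jump")
print(f"global: {g:.2f} -> {'keep' if g >= THRESHOLD else 'filter as noise'}")
for kit, f in per_kit.items():
    print(f"{kit}: {f:.2f} -> {'keep' if f >= THRESHOLD else 'filter as noise'}")
```

Conditioning on the weapon kit rescues the signal here. In real data, though, the relevant context also includes the rest of the loadout and the meta, so a single conditional frequency is not enough.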

Some models are not explicitly designed to handle all of the following at once: set-based inputs (where order doesn't matter but combinations do), non-linear interactions, and deep contextual dependencies. Such models are fundamentally ill-equipped for this task. Many community resources currently average popular builds or filter out perceived noise because it is straightforward, but this cannot meaningfully optimize loadouts. Instead, we need an approach that captures the holistic interplay between every element of a loadout within its specific gameplay environment.

Model Comparisons

| Model family | Handles set-based inputs (order-invariant) | Learns non-linear feature interactions | Captures deep contextual dependencies |
|---|---|---|---|
| Linear / Logistic Regression | None | None | None |
| Naive Bayes | Full (bag-of-words) | None | None |
| k-Nearest Neighbors | None | Full | None |
| Support-Vector Machine (with kernels) | Partial (needs a permutation-invariant kernel) | Full | None |
| Decision Trees | None | Full | Partial (shallow, path-wise) |
| Random Forest | None | Full | Partial (ensemble of shallow paths) |
| Gradient-Boosted Trees (e.g. XGBoost) | None | Full | Partial (layered shallow paths) |
| Multi-Layer Perceptron (feed-forward NN) | None | Full | Partial (only if context is fixed in the input window) |
| DeepSets | Full | Partial (implicit via pooled sum) | Partial (global but not element-wise context) |
| Sequence Transformers (e.g. GPT, BERT) | None (order-aware via positional encoding) | Full | Full |
| Set Transformers | Full | Full | Full |

This makes Set Transformers the most attractive option for this problem, which we'll explore in the next part.
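The core property can be shown without a deep learning framework. The sketch below is a toy, parameter-free version of the idea behind a Set Transformer: scaled dot-product self-attention over the elements of a build, followed by mean pooling. A real Set Transformer adds learned projections, multiple heads, feed-forward layers, and attention-based pooling (PMA) with learned seed vectors. The 3-dimensional ability embeddings here are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(elements):
    """Parameter-free scaled dot-product self-attention: queries = keys = values = inputs."""
    d = len(elements[0])
    outputs = []
    for q in elements:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in elements]
        weights = softmax(scores)
        # Each output mixes every element, so it depends on the whole set.
        outputs.append([sum(w * v[j] for w, v in zip(weights, elements)) for j in range(d)])
    return outputs


def encode_set(elements):
    """Mean-pool the attended elements into one order-invariant build vector."""
    attended = self_attention(elements)
    return [sum(col) / len(attended) for col in zip(*attended)]


# Hypothetical 3-d embeddings for a few abilities.
EMBED = {
    "Swim Speed Up": [1.0, 0.0, 0.2],
    "Quick Super Jump": [0.1, 1.0, 0.0],
    "Ink Resistance Up": [0.0, 0.3, 1.0],
    "Special Charge Up": [0.5, 0.5, 0.5],
}

build = ["Swim Speed Up", "Quick Super Jump", "Ink Resistance Up"]
shuffled = ["Ink Resistance Up", "Swim Speed Up", "Quick Super Jump"]
print(encode_set([EMBED[a] for a in build]))
print(encode_set([EMBED[a] for a in shuffled]))  # same vector, up to float rounding
```

Attention here has no positional encoding. Shuffling the build therefore only permutes the per-element outputs, and the pooled vector is unchanged. Each element's output, such as the lone Quick Super Jump, still depends on what else is in the set.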

The Road Ahead

We've journeyed through the intricate layers that make Splatoon 3 gear optimization such a deceptively difficult challenge. From the non-linear scaling of abilities and deep weapon dependencies to the ambiguous noise embedded in player data, it's clear that context is paramount. Conventional machine learning methods, often reliant on simpler assumptions about data independence or clearly separable signals, aren't designed to navigate this nuanced, combinatorial landscape effectively.

But complexity doesn't mean impossibility; it simply calls for a more specialized solution. In the next post (Part 2) of this series, I'll introduce SplatGPT, a deep learning model specifically built to handle these intricate challenges, leveraging architectures optimized for set-based inputs and deep contextual awareness.

Yet building the model is just the beginning. The deeper, and potentially more exciting, question is: how exactly does it make these optimization decisions? In Part 3, I'll dive into interpretability. I'll show how I trained a Sparse Autoencoder (SAE) on SplatGPT's internal activations to uncover thousands of monosemantic features that map neatly onto distinct strategic concepts. Given the sheer number of these features, I use doc2vec embeddings and automatic TF-IDF-based labeling to rapidly identify, label, and interpret them.

Finally, in Part 4, we'll push beyond passive interpretation into active collaboration. Using custom PyTorch hooks, I'll demonstrate how we can actively steer the gear completion process. Rather than passively accepting whatever optimal build the model generates, we'll be able to influence the direction it takes, nudging the optimization toward specific strategic goals: whether that's an aggressive push, a more defensive playstyle, or even entirely novel, niche configurations. By visualizing builds before optimization, after standard optimization, and following targeted steering, we'll unlock an entirely new dimension of control, turning SplatGPT into an interactive partner in strategic gear-building.

Join me as we move from clearly defining the complexity of gear optimization, to understanding the intricacies of an advanced AI solution, and finally, actively collaborating with that solution to build gear tailored precisely to specific strategic intentions in the rich, nuanced world of Splatoon 3.